Should You Cache Array.Length and List.Count?

Every time I see for (var i = 0; i < array.Length; ++i) I wonder if accessing that Length property is slow. Should I cache it? It's comforting to know that for (int i = 0, len = array.Length; i < len; ++i) is only dealing with local variables except on the first loop. Local variables must be faster, right? Likewise, I wonder the same thing about List<T>.Count. I finally got around to running a test to see if caching these length properties makes any performance difference. The answers might surprise you!

The test is dead simple: loop over a 100 million element byte[] and List<byte> with caching and no caching of the Length or Count property. Here are the four loops:

// Array.Length for (var i = 0; i < array.Length; ++i) for (int i = 0, len = array.Length; i < len; ++i) // List.Count for (var i = 0; i < list.Count; ++i) for (int i = 0, len = list.Count; i < len; ++i)

But first, let's decompile the Array and List<T> classes to see how these properties are implemented in Unity's mscorlib.dll. What do they do? First, here's Array.Length:

public int Length { [ReliabilityContract(Consistency.WillNotCorruptState, Cer.Success)] get { int num = this.GetLength(0); for (int i = 1; i < this.Rank; i++) { num *= this.GetLength(i); } return num; } } [MethodImpl(MethodImplOptions.InternalCall)] public extern int GetLength(int dimension); public int Rank { [ReliabilityContract(Consistency.WillNotCorruptState, Cer.Success)] get { return this.GetRank(); } } [MethodImpl(MethodImplOptions.InternalCall)] private extern int GetRank();

So Length calls the native (C code) GetLength function. It also calls the Rank property which calls the native GetRank function, just in case this is a multi-dimensional array. This means that getting the Length property involves calling three C# functions and two C functions. Will that make Length expensive or is there some special handling for arrays that speeds this all up? We'll see in a minute when we performance test it.

Next is the decompilation of List<T>.Count from the same DLL:

public int Count { get { return this._size; } }

Wow! It literally just returns a private field! What more could we ask for in terms of performance from the Count property?

Without further ado, let's do the performance test:

using System.Collections.Generic; using UnityEngine; public class TestScript : MonoBehaviour { void Start() { const int size = 100000000; var array = new byte[size]; var list = new List<byte>(size); for (var i = 0; i < size; ++i) { list.Add(0); } var sw = new System.Diagnostics.Stopwatch(); sw.Reset(); sw.Start(); for (var i = 0; i < array.Length; ++i) { } var arrayLengthTime = sw.ElapsedMilliseconds; sw.Reset(); sw.Start(); for (int i = 0, len = array.Length; i < len; ++i) { } var cachedArrayLengthTime = sw.ElapsedMilliseconds; sw.Reset(); sw.Start(); for (var i = 0; i < list.Count; ++i) { } var listCountTime = sw.ElapsedMilliseconds; sw.Reset(); sw.Start(); for (int i = 0, len = list.Count; i < len; ++i) { } var cachedListCountTime = sw.ElapsedMilliseconds; Debug.LogFormat( "Test,Property Time,Cached Property Time\n{0},{1},{2}\n{3},{4},{5}", "Array.Length", arrayLengthTime, cachedArrayLengthTime, "List.Count", listCountTime, cachedListCountTime ); } }

If you want to try out the test yourself, simply paste the above code into a TestScript.cs file in your Unity project's Assets directory and attach it to the main camera game object in a new, empty project. Then build in non-development mode for 64-bit processors and run it windowed at 640x480 with fastest graphics. I ran it that way on this machine:

- 2.3 Ghz Intel Core i7-3615QM

- Mac OS X 10.11.5

- Apple SSD SM256E, HFS+ format

- Unity 5.4.0f3, Mac OS X Standalone, x86_64, non-development

- 640x480, Fastest, Windowed

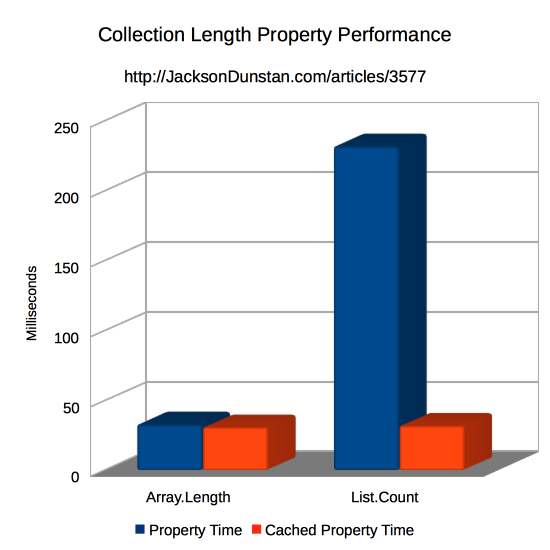

And here are the results I got:

| Test | Property Time | Cached Property Time |

|---|---|---|

| Array.Length | 33 | 31 |

| List.Count | 232 | 32 |

I would have expected that Array.Length would be a lot slower given all of the function calls and complexity we saw in the decompilation. Clearly there's some special array handling that's eliminating all that work because the "cached" and "non-cached" times are pretty much the same. The cached version is about 10% faster, but the numbers are so close over so many iterations that the difference is trivial.

List<T>.Count is a whole other story. The cached property time is right in line with the array times, which makes sense since the loop is exactly the same for 99,999,999 of the 100 million loops. If you forget to cache Count it's a whole other story. Suddenly the loop takes 7.25x longer! All of that due to one trivial property getter function which isn't specially handled like Array.Length.

In conclusion, we have a simple takeaway. Don't worry about caching Array.Length but definitely cache List<T>.Count if you've got a long list to iterate over!

Got any tricks for arrays and lists? Post a comment!

#1 by Josh Strike on September 12th, 2016 ·

I was curious about this, because in PHP it’s well known that using

for ($x=0;$x<count(array);$x++)

actually does cause the array's length to be calculated every time, and is significantly slower than caching the array length before running the loop.

I haven't switched from AS3/AIR to C#/Unity just yet, although I follow your posts out of interest. So I did a similar experiment in AS3, because I've never really checked on it. I always assumed it was faster to cache the length of an array, but I didn't know if maybe it didn't matter.

In fact it does. Starting with an array of 10,000,000 values, and looping through it simply adding the values to a single int, AS3 takes about 680ms on my machine if you use Array.length, whereas it takes 130ms if you use a fixed length.

This does make sense, because if you've ever tried to loop through an array in AS3 and spliced out values while doing so, while using the length of the array for the loop, you'll find that the loop respects the new length of the array after each iteration. This is / should be expected behavior if you're editing an array on the fly. I'd be curious — if the optimization you're describing is true — how many times through the loop do you get in C# if you pop a value out of the array on each iteration? Is it really caching the length of the array internally at the start of a loop? Because that seems like it could lead to dangerous / unpredictable behavior if you were altering the array in the loop itself. Sure there are speed drawbacks to using .length and there are bound to be issues if you start splicing arrays that you're currently looping through, but the logic of those two things together is sound. If you can't count on .length returning the current length (if it returns a cached length?) then you'd need to find some other way to determine it… And if it's not returning a cached length, then by what magic is it counting the array so fast?

#2 by Josh Strike on September 12th, 2016 ·

#3 by Josh Strike on September 12th, 2016 ·

Ooh. Related question. Does Array.length slow down and lose its magical cached length if the array is altered within the loop? Maybe that’s how they pulled the optimization, by using a checksum or something.

#4 by jackson on September 12th, 2016 ·

C# arrays have a fixed length, so you can’t add or remove elements after you’ve created them.

List<T>can grow and shrink though, so it’sCountcan vary after construction, such as in a loop. If you add or remove elements to it you should definitely make sure to update any cachedCountvariables to account for that. For example:#5 by Roger Miller on March 25th, 2019 ·

And thank you thank you!!! Just the data I needed mate! Keep up the good work.

#6 by CMaxo on August 23rd, 2019 ·

The actual difference between the Array & List speed when handling the Array’s Length and List’s Count is due to the way both are handled within Unity Garbage Collector.

In both case, it’s essentially better to cache the Length and Count value if they are repetitively used such as if you’re using for(…) loops statement.

When you’re using either Length or Count, you’re creating an unique integer variable on each step in the loops that has its own caching block. In fact, every single function creates its own block. If the same function with the same ID is called multiple time (for example, 1 function in 1 script that is kept at all time during the whole life of a scene), it will reload the same block each time. A block can only be resized bigger or destroyed by the Garbage Collector and the Garbage Collector only delete the blocks when the amount of memory is lacking. With time, Unity’s Garbage Collector management was greatly upgraded, but back during Unity 3-4 and prior version, LOTS of “veterans” developers though of Unity as Trash because they failed at understanding how to work “with” the Garbage collector and not “against it”.

Caching the Count or/and Length will create 2 blocks : 1 is the Count/Length block which will be trashed at some point whenever the Garbage Collector decides it and the other is the Function block which don’t just includes the cached value, but the whole part that uses it. If the Function’s content doesn’t change much in content based on conditions, the size of the block will be constant even if the Engine keep using it again and again and no new block will be created. As the block is constantly in uses, the Garbage Collector also doesn’t clear its cache unless the scene is changed.

When you use Count or Length within the for() Loop, both are considered as their own Function and they are stored, each time, with an unique cached ID that can’t be recovered by the Engine.

let’s say you do something like

public void Function1(){

for(int a = 0; a < List.Count; a++){

Function2();

}

This will create cached blocks as followed:

[Function 1 + a], [Function2], [List.Count] x .Count value

So if the List (or Array) has 1000, that's 1000 blocks with the List.Count integer value. It's each integer is 16bits. 1000 x 16 bits = 0.016Mb. That's may seem like a laughable amount of memory, but if compared to the cached version:

private int a = 0;

private int _Count = 0;

public void Function1(){

_Count = List.Count;

for(a = 0; a < List.Count; a++){

Function2();

}

This will create the following blocks:

[Function1], [a], [_Count], [Function2]

You might have noticed that I have also cached the for() integer. While you don't get anything within the function itself, you're getting the ability to reuse that specific Integer in other function that aren't running at the same time such as every for() loops of the same level.

You got 16 bits for the [a] block and another 16 bits for the [_Count] block.

That's 0.000032Mb of cached memory. You just saved 50000% of the cached memory, excluding the Engine's own micro-ID data that is stored with every blocks. Woohoo! The Garbage Collector is basically on vacation while you cache the data properly and the data is always fresh in the Flash Memory.

Here's a quick link to the Unity Optimization presentation back from 2016:

https://youtu.be/j4YAY36xjwE?t=1400

(I made the link to be at the part about the memory blocks being explained)

The only time I have found out that I couldn't be using a cached value was a very specific time where I had a for() loop in a function that was adding a Listener to a button generated in-game. Basically, there's a grid with a number of columns and rows determined by the player's choice. Each "tile" has a button (for selection) and I created an ID-based management system. I made it so that I could simply add the number of the for() integer to the specific Listener/function I created and that would always give me buttons with ID from 0 to whatever number of button on the grid AND the order of the ID/Button would always be from one corner to the other.

Because adding a listener uses a special part of the Unity's cache system, trying to cache the value elsewhere seems to be unable to access it unless it's within the same cached "Block".

#7 by jackson on August 23rd, 2019 ·

I have great news for you! The garbage collector only creates these blocks for managed types. That includes class instances, arrays, strings, and delegates but not primitives like

int, functions, orstructinstances that only contain primitives. This means you don’t need to worry about the GC at all when dealing with functions andintvariables like an array’sLengthorList<T>.Count.One way to know that this is the case is to look at 24:15 in the video and to notice that all of the examples are arrays, classes, and strings. Another is to do as you suggest and iterate over an array with 1000 elements with a local

intvariable or call another function. Open the profiler and look at the “GC Alloc” column and you’ll see a0. Now allocate a managed type like an array (e.g.new int[5]) and you’ll see a non-0value in the “GC Alloc” column.The great thing about this is that you don’t need to use fields for caching, which makes the code much more difficult to read, increases the size of

structandclassinstances, and leads to bugs when multiple functions contend for the field or a function forgets to reset it before using it. Instead, you can focus more on implementing your game’s features.Lastly, a couple of small points. The

inttype in C# is always 32 bits, not 16, and “Flash Memory” isn’t used in C# unless you happen to write code that reads from or writes to a file system that’s backed by that kind of storage technology.