NativeChunkedList<T>: Part 2

Last week we looked at a new native collection type: NativeChunkedList<T>. This type saved us a lot of memory and gave us a faster way to dynamically grow an array. Unfortunately, iterating over it was quite a lot slower. Today we’ll speed it up for both IJob and IJobParallelFor. In doing so, we’ll learn more about how to create custom Unity job types and about how IEnumerable and IEnumerator work.

Why Is It Slow?

Iterating over a NativeChunkedList<T> is slow for three reasons. First, at each iteration an indexing operation is performed. That operation internally performs an array index operation to get the chunk pointer and an array index operation to get the element of the chunk. Two indexes are simply slower than one, as is the case with NativeArray<T>, NativeList<T>, and NativeLinkedList<T>.

Second, indexing requires computing the array indexes for these two index operations. Computing the chunk index requires a division and computing the element index requires a modulus. Neither is required by NativeArray<T>, NativeList<T>, and NativeLinkedList<T>.

Third, when the loop reaches a new chunk it is unlikely that it will be in a CPU data cache. This cache miss will stall the CPU’s progress while it waits for the chunk data to be loaded from main memory. Since NativeArray<T>, NativeList<T>, and NativeLinkedList<T> are all single arrays, this issue never slowed them down.

Speedup Strategy

The first two issues can be solved by changing how we iterate. So far we’ve been using our random access tool—indexing—at each iteration of the loop. We can take advantage of knowing that what we’re really doing in an loop isn’t random: we’re accessing sequentially. With this knowledge, we can design a system that iterates faster.

The first step is to realize that we’ve been repeatedly looking up the same chunk pointer as we iterate over the elements of the chunk. We only get a new chunk pointer when we get to the next chunk. This means we can cache the chunk pointer while we’re iterating over the elements. If we do this, we’ll also naturally get rid of the division that was necessary to look up the chunk pointer index.

The second step is to get rid of the modulus by caching the element index. This allows us to simply increment the element index instead. The resulting loop looks something like this if it were inside of NativeChunkedList<T>:

public void IterateChunked() { // Iterate over the chunks for (int chunkIndex = 0; chunkIndex < m_State->m_ChunksLength; ++chunkIndex) { // Get the current chunk NativeChunkedListChunk chunk = m_State->m_Chunks[chunkIndex]; // Get the index of the last element in the chunk // This is less than the chunk length on the last chunk int endIndex = chunkIndex == m_State->m_ChunksLength - 1 ? (m_State->m_Length - 1) % m_State->mChunkLength : m_State->mChunkLength - 1; // Iterate over the elements of the chunk for (int elemIndex = 0; elemIndex <= endIndex; ++elemIndex) { T val = UnsafeUtility.ReadArrayValue<T>(chunk.m_Values, elemIndex); // Do something with val } } }

Notice that there are no more divides, modulus is only performed on the very last chunk, and the chunk pointers array is only indexed once per chunk. This seems like a good solution to the first two issues.

Enumerables and Enumerators

The critical problem with IterateChunked is that it has to be inside NativeChunkedList<T> so it can have raw access to its fields. Users of NativeChunkedList<T> therefore don’t have a way to write a version of this function or to inject their own code at the Do something with val line. This could be solved with a delegate call at that line, but that’s not usable with Burst-compiled jobs and would be counterproductively expensive. Instead, we’ll use the normal C# approach of IEnumerable and IEnumerator to make this loop accessible to users of the type.

Since the loop is two dimensional, we’re going to need to create four nested types:

ChunksEnumerable:IEnumerablefor chunksChunksEnumerator:IEnumeratorfor chunksChunkEnumerable:IEnumerablefor elements of a chunkChunkEnumerator:IEnumeratorfor elements of a chunk

Let’s go through them one at a time:

[StructLayout(LayoutKind.Sequential)] public unsafe struct ChunksEnumerable : IEnumerable<IEnumerable<T>> , IEnumerable , IDisposable { private readonly NativeChunkedList<T> m_List; private readonly int m_StartChunksIndex; private readonly int m_StartChunkIndex; private readonly int m_EndChunksIndex; private readonly int m_EndChunkIndex; internal ChunksEnumerable( NativeChunkedList<T> list, int startChunksIndex, int startChunkIndex, int endChunksIndex, int endChunkIndex) { m_List = list; m_StartChunksIndex = startChunksIndex; m_StartChunkIndex = startChunkIndex; m_EndChunksIndex = endChunksIndex; m_EndChunkIndex = endChunkIndex; } public ChunksEnumerator GetEnumerator() { return new ChunksEnumerator( m_List, m_StartChunksIndex, m_StartChunkIndex, m_EndChunksIndex, m_EndChunkIndex); } IEnumerator<IEnumerable<T>> IEnumerable<IEnumerable<T>>.GetEnumerator() { return GetEnumerator(); } IEnumerator IEnumerable.GetEnumerator() { return GetEnumerator(); } public void Dispose() { } }

This type mostly exists as a middle-man between NativeChunkedList<T> and ChunksEnumerator<T>. It takes the parameters that ChunksEnumerator<T> needs in its own constructor, stores them as fields, and passes them to the constructor of ChunksEnumerator<T> in GetEnumerator. There are three GetEnumerator functions here: one to satisfy the IEnumerable interface, on to satisfy the IEnumerable<T> interface, and one that returns the actual enumerator type so the compiler has one to call that won’t cause boxing and GC. We also need to include a no-op Dispose to satisfy the IDisposable interface.

This code has an upgrade to the simple IterateChunked loop in that it can iterate over any range of values, not just starting at index 0 and going through index Length-1. This is captured in the m_StartChunksIndex, m_StartChunkIndex, m_EndChunksIndex, and m_EndChunkIndex variables which control the ranges of iteration for the chunk pointers ([m_StartChunksIndex, m_EndChunksIndex)) and chunk elements ([m_StartChunkIndex, m_EndChunkIndex)).

Now let’s look at ChunksEnumerator<T>:

[StructLayout(LayoutKind.Sequential)] public unsafe struct ChunksEnumerator : IEnumerator<IEnumerable<T>> , IEnumerator , IDisposable { private readonly NativeChunkedList<T> m_List; private int m_Index; private readonly int m_StartChunksIndex; private readonly int m_StartChunkIndex; private readonly int m_EndChunksIndex; private readonly int m_EndChunkIndex; internal ChunksEnumerator( NativeChunkedList<T> list, int startChunksIndex, int startChunkIndex, int endChunksIndex, int endChunkIndex) { m_List = list; m_Index = startChunksIndex - 1; m_StartChunksIndex = startChunksIndex; m_StartChunkIndex = startChunkIndex; m_EndChunksIndex = endChunksIndex; m_EndChunkIndex = endChunkIndex; } public bool MoveNext() { m_List.RequireReadAccess(); m_Index++; return m_Index <= m_EndChunksIndex; } public ChunkEnumerable Current { get { m_List.RequireReadAccess(); // Just one chunk NativeChunkedListState* state = m_List.m_State; void* chunk = state->m_Chunks[m_Index].m_Values; int startChunkIndex; int endChunkIndex; if (m_StartChunksIndex == m_EndChunksIndex) { startChunkIndex = m_StartChunkIndex; endChunkIndex = m_EndChunkIndex; } // Start chunk else if (m_Index == m_StartChunksIndex) { startChunkIndex = m_StartChunkIndex; endChunkIndex = state->m_ChunkLength - 1; } // End chunk else if (m_Index == m_EndChunksIndex) { startChunkIndex = 0; endChunkIndex = m_EndChunkIndex; } // Middle chunk else { startChunkIndex = 0; endChunkIndex = state->m_ChunkLength - 1; } // Create the enumerator #if ENABLE_UNITY_COLLECTIONS_CHECKS int baseIndex = state->m_ChunkLength * m_Index; return new ChunkEnumerable( m_List, chunk, startChunkIndex, endChunkIndex, baseIndex); #else return new ChunkEnumerable( m_List, chunk, startChunkIndex, endChunkIndex); #endif } } IEnumerable<T> IEnumerator<IEnumerable<T>>.Current { get { return Current; } } object IEnumerator.Current { get { return Current; } } public void Reset() { m_List.RequireReadAccess(); m_Index = m_StartChunksIndex - 1; } public void Dispose() { } }

This type is an IEnumerator<IEnumerable<T>> as it enumerates over enumerables of elements. Its MoveNext is therefore iterating over the chunk pointers array by simply incrementing m_Index: no division necessary. The Current property determines the index range of the chunk to enumerate over. This involves a few if statements, but that’s not too bad since this only happens once per chunk.

In addition to computing the index range, the base index is also computed and passed to the ChunkEnumerable constructor. This is a whole-list index, not an index into the chunk pointers array or the chunk’s elements. It’s useful for range checks which we’ll see later on in ChunkEnumerator. In the meantime, let’s look at ChunkEnumerable:

[StructLayout(LayoutKind.Sequential)] public unsafe struct ChunkEnumerable : IEnumerable<T> , IEnumerable , IDisposable { private readonly NativeChunkedList<T> m_List; private readonly void* m_Chunk; private readonly int m_StartChunkIndex; private readonly int m_EndChunkIndex; #if ENABLE_UNITY_COLLECTIONS_CHECKS private readonly int m_BaseIndex; #endif internal ChunkEnumerable( NativeChunkedList<T> list, void* chunk, int startChunkIndex, int endChunkIndex #if ENABLE_UNITY_COLLECTIONS_CHECKS , int baseIndex #endif ) { m_List = list; m_Chunk = chunk; m_StartChunkIndex = startChunkIndex; m_EndChunkIndex = endChunkIndex; #if ENABLE_UNITY_COLLECTIONS_CHECKS m_BaseIndex = baseIndex; #endif } public ChunkEnumerator GetEnumerator() { return new ChunkEnumerator( m_List, m_Chunk, m_StartChunkIndex, m_EndChunkIndex #if ENABLE_UNITY_COLLECTIONS_CHECKS , m_BaseIndex #endif ); } IEnumerator<T> IEnumerable<T>.GetEnumerator() { return GetEnumerator(); } IEnumerator IEnumerable.GetEnumerator() { return GetEnumerator(); } public void Dispose() { } }

Like ChunksEnumerable, this is another middle-man whose entire purpose is to take the parameters ChunkEnumerator needs, store them as fields, and pass them to the ChunkEnumerator constructor in GetEnumerator.

Finally, let’s look at ChunkEnumerator to see the code that actually accesses the list’s elements:

[StructLayout(LayoutKind.Sequential)] public unsafe struct ChunkEnumerator : IEnumerator<T> , IEnumerator , IDisposable { private NativeChunkedList<T> m_List; private readonly void* m_Chunk; private int m_Index; private readonly int m_StartChunkIndex; private readonly int m_EndChunkIndex; #if ENABLE_UNITY_COLLECTIONS_CHECKS private readonly int m_BaseIndex; #endif internal ChunkEnumerator( NativeChunkedList<T> list, void* chunk, int startChunkIndex, int endChunkIndex #if ENABLE_UNITY_COLLECTIONS_CHECKS , int baseIndex #endif ) { m_List = list; m_Chunk = chunk; m_Index = startChunkIndex - 1; m_StartChunkIndex = startChunkIndex; m_EndChunkIndex = endChunkIndex; #if ENABLE_UNITY_COLLECTIONS_CHECKS m_BaseIndex = baseIndex; #endif } public bool MoveNext() { m_Index++; return m_Index <= m_EndChunkIndex; } public T Current { get { m_List.RequireReadAccess(); #if ENABLE_UNITY_COLLECTIONS_CHECKS m_List.RequireIndexInSafetyRange(m_BaseIndex + m_Index); #endif return UnsafeUtility.ReadArrayElement<T>(m_Chunk, m_Index); } [WriteAccessRequired] set { m_List.RequireWriteAccess(); #if ENABLE_UNITY_COLLECTIONS_CHECKS m_List.RequireIndexInSafetyRange(m_BaseIndex + m_Index); #endif UnsafeUtility.WriteArrayElement(m_Chunk, m_Index, value); } } object IEnumerator.Current { get { return Current; } } public void Reset() { m_Index = m_StartChunkIndex - 1; } public void Dispose() { } }

Like ChunksEnumerator, this MoveNext can also increment to go to the next element: no modulus required. The actual list element access is via the Current property which simply reads from or writes to the chunk array. This is also where the base index is used to compute the whole-list index and to check that it is inside the safety range of the list.

Lastly, we need a way for users of NativeChunkedList<T> to get a ChunksEnumerable. To do this, we’ll add two features to the NativeChunkedList<T> public API:

public ChunksEnumerable Chunks { get { return new ChunksEnumerable( this, 0, 0, m_State->m_ChunksLength - 1, (m_State->m_Length - 1) % m_State->m_ChunkLength); } } public ChunksEnumerable GetChunksEnumerable( int startIndex, int endIndex) { RequireReadAccess(); int lastIndex = endIndex - 1; RequireIndicesInBounds(startIndex, lastIndex); return new ChunksEnumerable( this, startIndex / m_State->m_ChunkLength, startIndex % m_State->m_ChunkLength, lastIndex / m_State->m_ChunkLength, lastIndex % m_State->m_ChunkLength); }

The Chunks property creates a ChunksEnumerable for every element in the list while GetChunksEnumerable creates a ChunksEnumerable for a sub-range of the list. These require some division and modulus operations, but it’s only needed once per iteration so the overall cost should be quite low compared to the ensuing loop over the ChunksEnumerable.

Using Enumerables and Enumerator

Usage of the above interface is simple as the enumerable and enumerator types hide all the underlying work and enable the foreach loop by complying with the IEnumerable<T> and IEnumerator<T> interfaces. Here’s how iteration works:

foreach (var e in List.Chunks) { foreach (int element in e) { // Do something with element } }

To iterate without foreach, which isn’t usable in a Burst-compiled job due its automatic inclusion of try and catch, use a normal for loop like this:

for (var chunks = List.Chunks.GetEnumerator(); chunks.MoveNext(); ) { for (var chunk = chunks.Current.GetEnumerator(); chunk.MoveNext(); ) { int element = chunk.Current; // Do something with element } }

To iterate over a sub-range, use GetEnumerator like this:

for ( var chunks = List.GetChunksEnumerable(startIndex, endIndex).GetEnumerator(); chunks.MoveNext();) { for ( var chunk = chunks.Current.GetEnumerator(); chunk.MoveNext();) { int element = chunk.Current; // Do something with element } }

This means we can change the IJob from the performance test from this:

[BurstCompile] struct ChunkedListIterateJob : IJob { public NativeChunkedList<int> List; public NativeArray<int> Sum; public void Execute() { for (int i = 0; i < List.Length; ++i) { Sum[0] += List[i]; } } }

To this:

[BurstCompile] struct ChunkedListIterateJob : IJob { public NativeChunkedList<int> List; public NativeArray<int> Sum; public void Execute() { for ( var chunks = List.Chunks.GetEnumerator(); chunks.MoveNext(); ) { for ( var chunk = chunks.Current.GetEnumerator(); chunk.MoveNext(); ) { Sum[0] += chunk.Current; } } } }

The key difference is that we’re now using enumerables and enumerators in a two-dimensional loop instead of the indexer in a one-dimensional loop. It’s a little more code, but still a rather simple way to iterate.

ParallelFor Jobs

In an IJobParallelFor, our Execute function is passed the index of the element to operate on. That would seem to force us to use the indexer rather than the new enumerables and enumerators. This isn’t strictly the case though. Recall that IJobParallelFor batches work by looping over a range of indices and calling the job’s Execute function. If we create our own job type, we can simply pass the range of indices and let the job’s Execute function do the looping. That’s ideal for this situation since we have GetChunksEnumerable which operates on ranges of indices.

Here’s how the custom job interface and extensions look:

[JobProducerType(typeof(IJobParallelForRangedExtensions.ParallelForJobStruct<>))] public interface IJobParallelForRanged { void Execute(int startIndex, int endIndex); } public static class IJobParallelForRangedExtensions { internal struct ParallelForJobStruct<TJob> where TJob : struct, IJobParallelForRanged { public static IntPtr jobReflectionData; public static IntPtr Initialize() { if (jobReflectionData == IntPtr.Zero) { jobReflectionData = JobsUtility.CreateJobReflectionData( typeof(TJob), JobType.ParallelFor, (ExecuteJobFunction)Execute); } return jobReflectionData; } public delegate void ExecuteJobFunction( ref TJob data, IntPtr additionalPtr, IntPtr bufferRangePatchData, ref JobRanges ranges, int jobIndex); public static unsafe void Execute( ref TJob jobData, IntPtr additionalPtr, IntPtr bufferRangePatchData, ref JobRanges ranges, int jobIndex) { int startIndex; int endIndex; while (JobsUtility.GetWorkStealingRange( ref ranges, jobIndex, out startIndex, out endIndex)) { jobData.Execute(startIndex, endIndex); } } } unsafe public static JobHandle ScheduleRanged<T>( this T jobData, int valuesLength, int innerloopBatchCount, JobHandle dependsOn = new JobHandle()) where T : struct, IJobParallelForRanged { var scheduleParams = new JobsUtility.JobScheduleParameters( UnsafeUtility.AddressOf(ref jobData), ParallelForJobStruct<T>.Initialize(), dependsOn, ScheduleMode.Batched); return JobsUtility.ScheduleParallelFor( ref scheduleParams, valuesLength, innerloopBatchCount); } unsafe public static void RunRanged<T>( this T jobData, int valuesLength) where T : struct, IJobParallelForRanged { var scheduleParams = new JobsUtility.JobScheduleParameters( UnsafeUtility.AddressOf(ref jobData), ParallelForJobStruct<T>.Initialize(), new JobHandle(), ScheduleMode.Run); JobsUtility.ScheduleParallelFor( ref scheduleParams, valuesLength, valuesLength); } }

This defines a new job interface: IJobParallelForRanged. The difference compared to the regular IJobParallelFor is that its Execute takes a range of indices rather than a single index. We then define the boilerplate Initialize function to reflect on job type implementing this interface and cache that result. We also define the Execute function that executes a batch by simply passing the range of indices to the job’s Execute function. Lastly, we define the ExecuteRanged and RunRanged extension functions for running the job. These have the same signature as IJobParallelFor.Execute and IJobParallelFor.Run, so we need to name them with Ranged to avoid naming collisions as C# doesn’t allow us to overload based on where constraints.

Now that we have this custom job type interface we can convert the performance test’s IJobParallelFor from this:

[BurstCompile] struct ChunkedListIterateJobParallelFor : IJobParallelFor { public NativeChunkedList<int> List; public NativeArray<int> Sum; public void Execute(int index) { Sum[0] += List[index]; } }

To this:

[BurstCompile] struct ChunkedListIterateJobParallelFor : IJobParallelForRanged { public NativeChunkedList<int> List; public NativeArray<int> Sum; public void Execute(int startIndex, int endIndex) { for ( var chunks = List.GetChunksEnumerable(startIndex, endIndex).GetEnumerator(); chunks.MoveNext();) { for ( var chunk = chunks.Current.GetEnumerator(); chunk.MoveNext();) { Sum[0] += chunk.Current; } } } }

Like with the IJob, we’re no longer using the indexer and now using a doubly-nested loop of the enumerables and enumerators. The loops look just like in the IJob except that we use the GetChunksEnumerable method instead of the Chunks property to create the enumerator.

Performance Testing

Now let’s run the performance test to see if all this work was worth it. I ran using this environment, which is identical to last week’s test environment:

- 2.7 Ghz Intel Core i7-6820HQ

- macOS 10.14

- Unity 2018.2.16f1

- com.unity.collections package 0.0.9-preview.10

- macOS Standalone

- .NET 4.x scripting runtime version and API compatibility level

- IL2CPP

- Non-development

- 640×480, Fastest, Windowed

Here are the results I got:

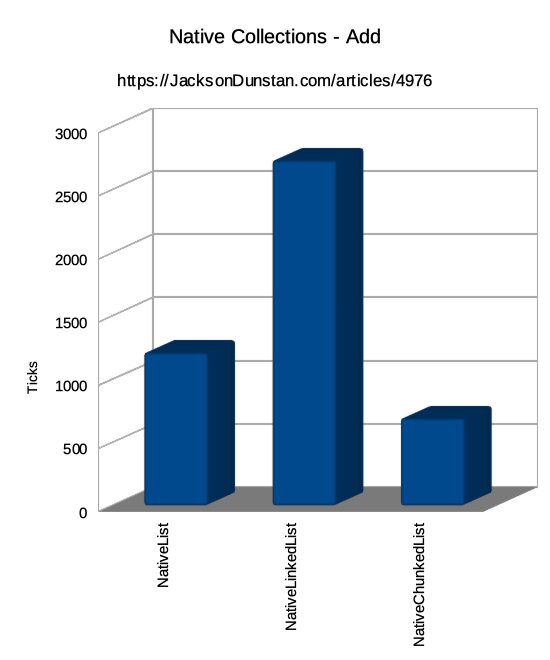

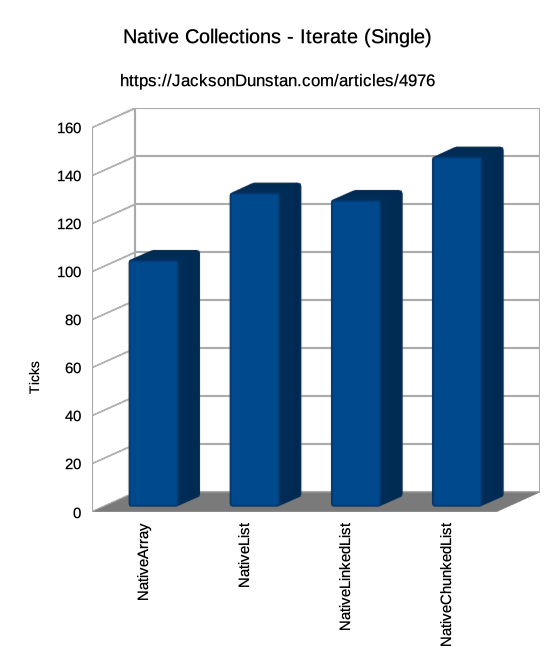

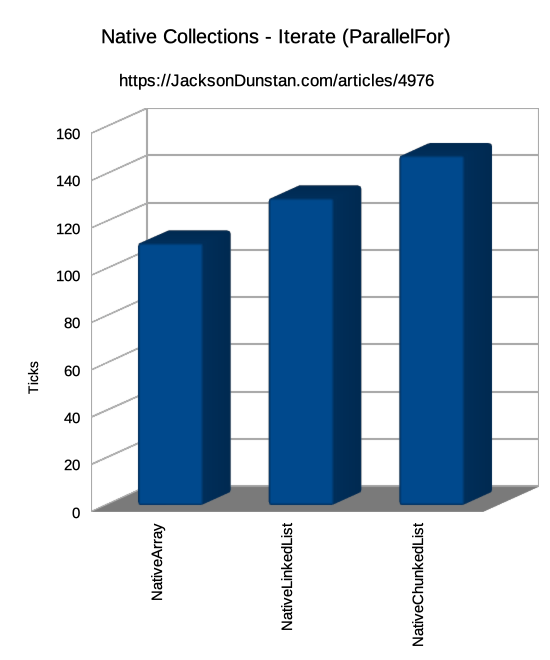

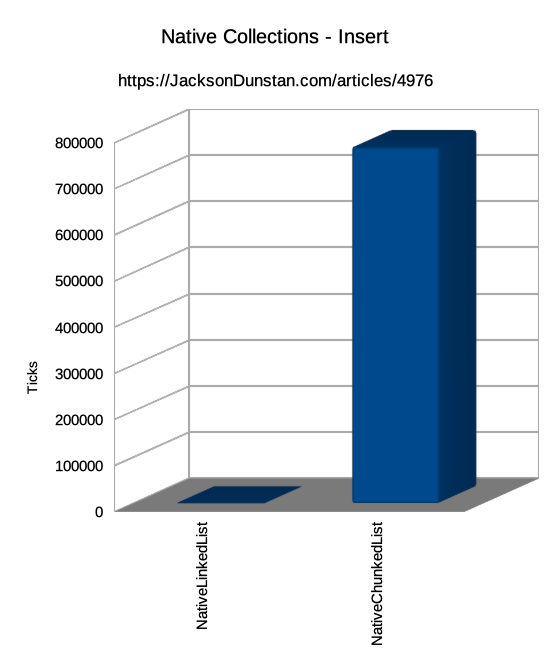

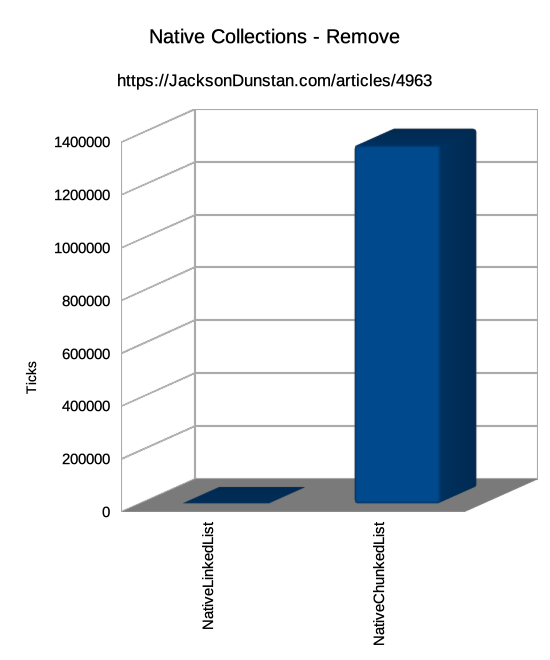

| Operation | Job Type | NativeArray | NativeList | NativeLinkedList | NativeChunkedList |

|---|---|---|---|---|---|

| Add | Single | n/a | 1212 | 2734 | 691 |

| Iterate | Single | 103 | 131 | 128 | 146 |

| Iterate | ParallelFor | 111 | n/a | 130 | 148 |

| Insert | Single | n/a | n/a | 655 | 773624 |

| Remove | Single | n/a | n/a | 662 | 1357340 |

The performance of Add, Insert, and Remove is, predictably, unchanged. Iteration, which we’ve focused on in this article, is now much faster! It used to take 2-3x longer to iterate over a NativeChunkedList<T> than any other native collection type. Now it only takes slightly longer: 11-42% for IJob and 14-33% for IJobParallelFor. This is likely due to the inter-chunk CPU data cache miss described above.

Conclusion

By recognizing that we’re iterating linearly and not indexing randomly, we’ve been able to create a good solution for the more constrained problem. We now have custom enumerables and enumerators plus a custom job type that allow us to much more efficiently (2-3x!) iterate over a NativeChunkedList<T>. The penalty is now low enough that iteration speed is no longer a major reason to avoid using NativeChunkedList<T>. The full source code is now available on the GitHub project, so feel free to check it out or incorporate it into your own projects.