Native Memory Allocators: More Than Just a Lifetime

Unity exposes several native memory allocators through the Allocator enum: Persistent, TempJob, and Temp. The names indicate the intended lifetime of the allocation: forever, a few frames, and one frame, respectively. Each also selects a different underlying algorithm for allocating and deallocating memory. Those algorithms affect the code that uses them in different ways, and that’s what we’ll explore in today’s article.

The Test

Today we’ll be observing a few properties of Unity’s allocators. We’ll do this using a test script that allocates 1000 four-byte blocks with four-byte alignment. We’ll measure the time it takes to perform these allocations and the time it takes to deallocate them. Then we’ll generate a report showing the distance between the allocations in a couple of ways.

Here’s the source code for the test script:

    using System.Collections.Generic;
    using System.Diagnostics;
    using System.IO;
    using System.Text;
    using Unity.Collections;
    using Unity.Collections.LowLevel.Unsafe;
    using UnityEngine;

    class TestScript : MonoBehaviour
    {
        void Start()
        {
            TestAll(1000, 4, 4);
        }

        private static void TestAll(int num, int size, int align)
        {
            Test(Allocator.Temp, num, size, align);
            Test(Allocator.TempJob, num, size, align);
            Test(Allocator.Persistent, num, size, align);
        }

        static unsafe void Test(Allocator allocator, int num, int size, int align)
        {
            // Allocate and deallocate
            void*[] allocs = new void*[num];
            Stopwatch stopwatch = Stopwatch.StartNew();
            for (int i = 0; i < num; ++i)
            {
                allocs[i] = UnsafeUtility.Malloc(size, align, allocator);
            }
            long allocateTicks = stopwatch.ElapsedTicks;
            stopwatch.Restart();
            for (int i = 0; i < num; ++i)
            {
                UnsafeUtility.Free(allocs[i], allocator);
            }
            long deallocateTicks = stopwatch.ElapsedTicks;
            StringBuilder timeReport = new StringBuilder(256);
            timeReport.Append("Operation,Ticks\n");
            timeReport.Append("Allocate,").Append(allocateTicks).Append('\n');
            timeReport.Append("Deallocate,").Append(deallocateTicks);
            string timesFile = allocator + "_times.csv";
            string timePath = Path.Combine(Application.dataPath, timesFile);
            File.WriteAllText(timePath, timeReport.ToString());

            // Distance report
            StringBuilder distReport = new StringBuilder(num * 32);
            distReport.Append("Distance\n");
            long GetDistance(int index)
            {
                long curBegin = (long)allocs[index];
                long prevEnd = (long)allocs[index - 1] + size;
                return curBegin - prevEnd;
            }
            for (int i = 1; i < num; ++i)
            {
                long distance = GetDistance(i);
                distReport.Append(distance);
                distReport.Append('\n');
            }
            string distancesFile = allocator + "_distances.csv";
            string distPath = Path.Combine(Application.dataPath, distancesFile);
            File.WriteAllText(distPath, distReport.ToString());

            // Bucket report
            Dictionary<long, int> buckets = new Dictionary<long, int>(num);
            for (int i = 1; i < num; ++i)
            {
                long distance = GetDistance(i);
                if (buckets.TryGetValue(distance, out int count))
                {
                    buckets[distance] = count + 1;
                }
                else
                {
                    buckets[distance] = 1;
                }
            }
            StringBuilder bucketReport = new StringBuilder(num * 32);
            bucketReport.Append("Distance,Count\n");
            foreach (KeyValuePair<long, int> pair in buckets)
            {
                bucketReport.Append(pair.Key).Append(',').Append(pair.Value).Append('\n');
            }
            string bucketsFile = allocator + "_buckets.csv";
            string bucketPath = Path.Combine(Application.dataPath, bucketsFile);
            File.WriteAllText(bucketPath, bucketReport.ToString());
        }
    }

Performance

First, let’s look at the performance of allocation and deallocation across all three allocator types. I ran the test in this environment:

- 2.7 GHz Intel Core i7-6820HQ

- macOS 10.14.6

- Unity 2019.2.9f1

- macOS Standalone

- .NET 4.x scripting runtime version and API compatibility level

- IL2CPP

- Non-development

- 640×480, Fastest, Windowed

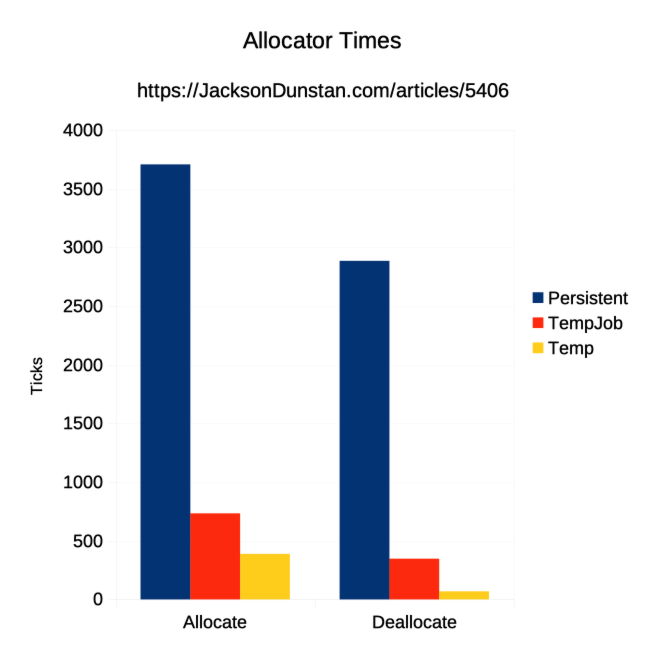

And here are the results I got:

The Persistent allocator is far slower than either TempJob or Temp. It’s roughly 5x slower to allocate and 8x slower to deallocate compared to TempJob. TempJob, in turn, takes about 2x longer to allocate and 5x longer to deallocate than Temp.
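The test script reports raw Stopwatch ticks, and the length of a tick depends on the machine's timer frequency. To compare numbers across machines, the ticks can be converted to time. Here's a minimal sketch of that conversion. The class and method names are hypothetical and not part of the test script; only `Stopwatch.Frequency` (ticks per second) comes from .NET.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper, not part of the original test script.
static class TickConversion
{
    // Converts Stopwatch ticks to microseconds using the timer's
    // frequency (ticks per second), which varies between machines.
    public static double TicksToMicroseconds(long ticks)
    {
        return ticks * 1_000_000.0 / Stopwatch.Frequency;
    }

    // Average cost of one operation, in microseconds, when `ticks`
    // were spent performing `count` operations.
    public static double MicrosecondsPerOperation(long ticks, int count)
    {
        return TicksToMicroseconds(ticks) / count;
    }
}
```

For example, `TickConversion.MicrosecondsPerOperation(allocateTicks, 1000)` would give the average cost of one of the 1000 allocations in the test.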

Allocator.Persistent

Now let’s look at the results for the Persistent allocator in terms of distance between successive allocations. That is to say, how far is the memory for one allocation from the memory for the previous allocation? This can be very important for CPU cache locality in the case of a native collection like NativeChunkedList and can give valuable insight into the underlying allocation algorithm.
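To make the distance metric concrete, here's a small sketch that applies the same calculation as the test script's `GetDistance` to plain numbers rather than real pointers. The class name and the sample addresses are made up for illustration.

```csharp
using System.Collections.Generic;

// Hypothetical helper that mirrors the test script's distance and
// bucket reports, but works on plain numbers instead of pointers.
static class DistanceSketch
{
    // Gap between the end of each block and the start of the next one,
    // given the start addresses in allocation order. A negative gap
    // means the next block sits at a lower address.
    public static long[] Gaps(long[] starts, int size)
    {
        if (starts.Length < 2)
        {
            return new long[0];
        }
        long[] gaps = new long[starts.Length - 1];
        for (int i = 1; i < starts.Length; ++i)
        {
            long prevEnd = starts[i - 1] + size;
            gaps[i - 1] = starts[i] - prevEnd;
        }
        return gaps;
    }

    // Counts how many times each gap value occurs.
    public static Dictionary<long, int> Buckets(long[] gaps)
    {
        Dictionary<long, int> buckets = new Dictionary<long, int>();
        foreach (long gap in gaps)
        {
            if (buckets.TryGetValue(gap, out int count))
            {
                buckets[gap] = count + 1;
            }
            else
            {
                buckets[gap] = 1;
            }
        }
        return buckets;
    }
}
```

With the made-up start addresses 1000, 1032, 1064, and 1112 and a block size of 4, `Gaps` returns 28, 28, and 44, and `Buckets` counts two allocations at a distance of 28 and one at 44.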

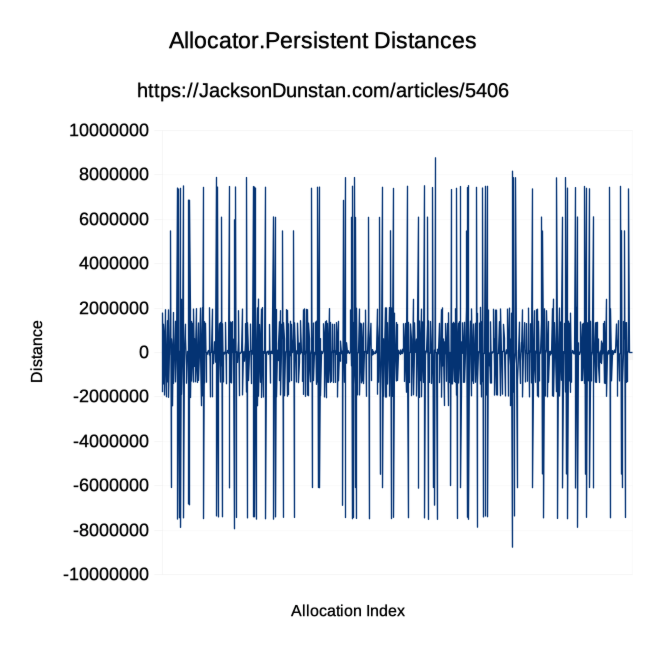

First, let’s look at a graph showing the distance from the previous allocation:

The graph shows the allocations are all over the place. Most of them are within 2 MB of the previous allocation, but many go out as far as 8 MB away. There’s very little consistency here.

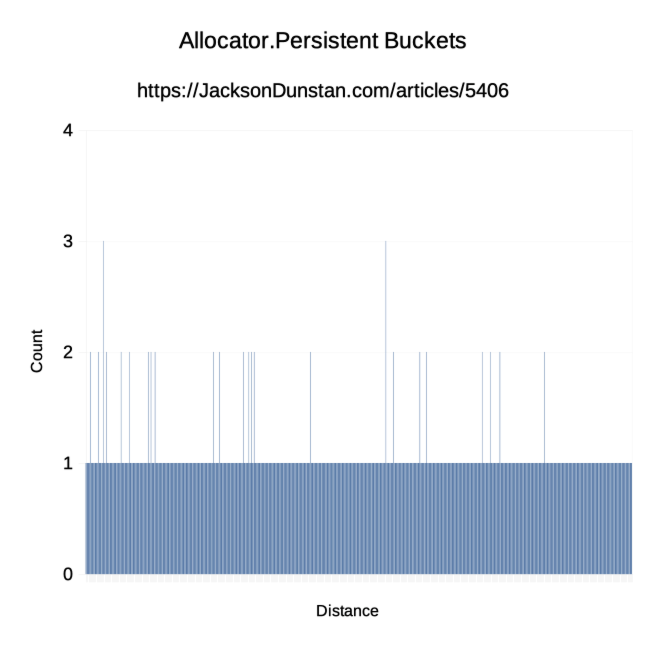

Next, let’s group together allocations that are the same distance away from the previous allocation into buckets and count the number of allocations in each bucket. Here’s how that looks:

We can see that nearly every distance is unique since almost all of the buckets have just one allocation in them. A few here and there have two and a couple have three, but overall the bucket counts confirm how inconsistent this allocator is.

Allocator.TempJob

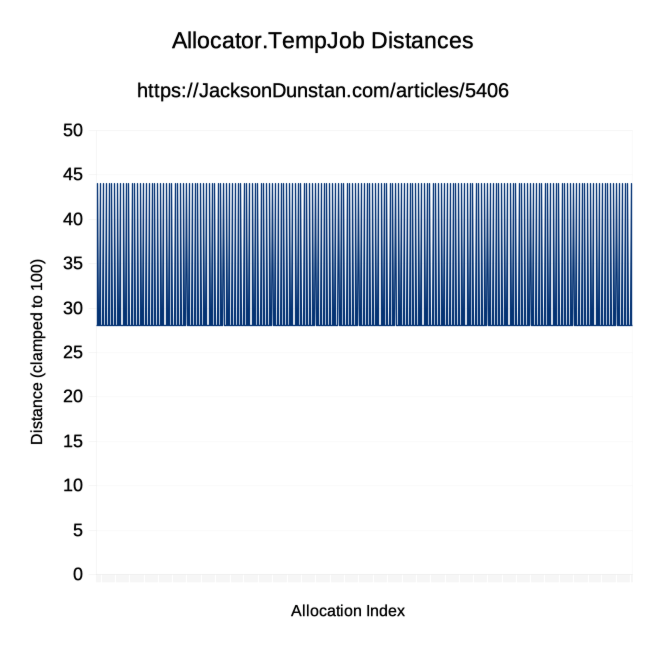

Next, let’s look at the TempJob allocator using the same two graphs. First, here are the distances:

This is remarkably consistent compared to the Persistent allocator! We’re either getting a distance of 28 or 44 every single time. There are runs of 3-5 distances of 28 and then a 44, over and over throughout the 1000 allocations in the test.
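A pattern of runs like this is easier to confirm from the distances CSV than from a graph. Here's a minimal sketch that collapses consecutive equal distances into (distance, run length) pairs. The class name is hypothetical and the sample values below are made up to resemble the pattern, not taken from the actual report.

```csharp
using System.Collections.Generic;

// Hypothetical helper for spotting repeating patterns in a list of
// distances. Not part of the original test script.
static class RunLengths
{
    // Collapses consecutive equal values into (value, length) pairs,
    // stored as Key = value and Value = run length.
    public static List<KeyValuePair<long, int>> Encode(long[] values)
    {
        List<KeyValuePair<long, int>> runs = new List<KeyValuePair<long, int>>();
        foreach (long value in values)
        {
            int last = runs.Count - 1;
            if (last >= 0 && runs[last].Key == value)
            {
                // Same value as the current run: extend it by one
                runs[last] = new KeyValuePair<long, int>(value, runs[last].Value + 1);
            }
            else
            {
                // Different value: start a new run
                runs.Add(new KeyValuePair<long, int>(value, 1));
            }
        }
        return runs;
    }
}
```

Encoding the made-up sequence 28, 28, 28, 44, 28, 28, 28, 28, 44 yields the runs (28, 3), (44, 1), (28, 4), (44, 1).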

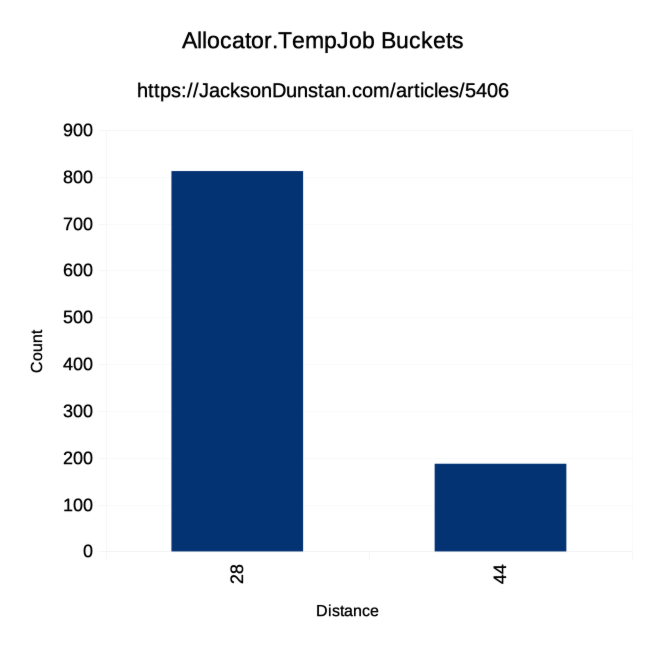

Now let’s look at the buckets to confirm:

Here we see just the two buckets: 28 and 44. There are more in the 28 bucket, which corresponds with the runs of 3-5 at that distance.

Allocator.Temp

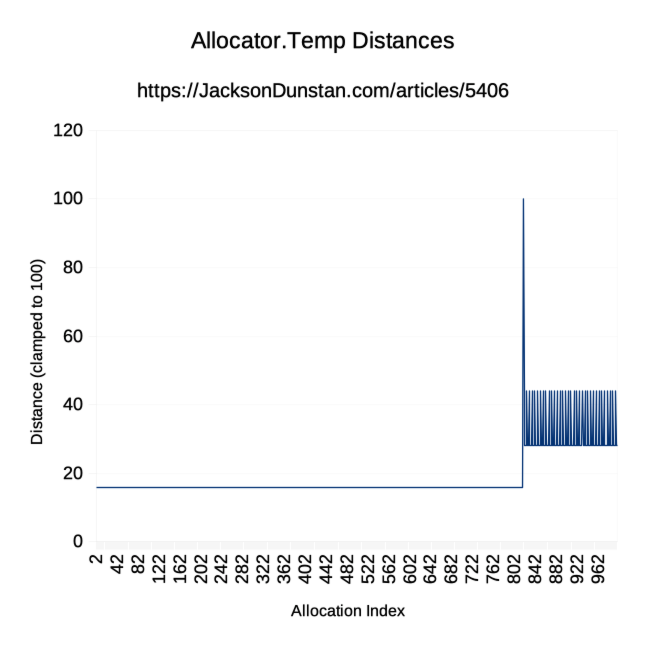

The final allocator is Temp. Let’s look at its distances graph, which has been clamped to 100 due to a single very large value we’ll discuss in a minute:

There’s a really obvious discontinuity in this graph at allocation 819. Until that point, every single allocation was exactly 16 bytes after the end of the previous allocation. Then, suddenly, the next allocation is 140,685,055,869,340 bytes later. That puts that allocation in an entirely different area of memory, but then we immediately return to a more predictable pattern. As with the TempJob allocator, we now start seeing runs of 28 bytes followed by a 44 byte distance. It’s as though the Temp allocator runs out of space and falls back on the TempJob allocator at that point.

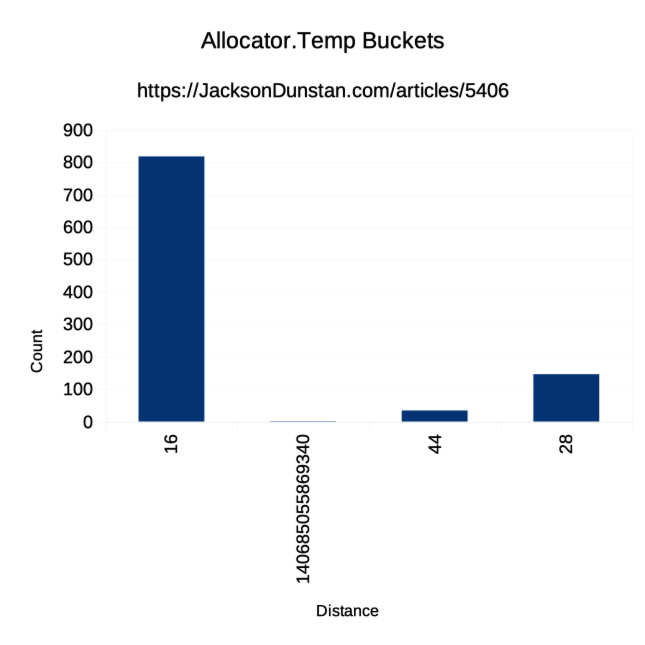

Now let’s see the buckets for Temp:

The majority of the allocations were in the first 818 of 1000 and they all fell into the 16-byte bucket. Then we hit the 140,685,055,869,340-byte discontinuity, which occurs exactly once. After that we see the 44-byte and 28-byte buckets with a similar ratio to the TempJob buckets graph.

Conclusion

Today we’ve observed some interesting properties of Unity’s allocators. We’ve seen that both allocation and deallocation performance is vastly improved when avoiding the Persistent allocator in favor of TempJob or especially Temp.

We’ve also looked at the distance between allocations and found that Persistent is all over the map, but TempJob and Temp are quite consistent. This may be useful for cache locality when performing sequential allocations and also gives some insight into how the allocators work.

Persistent is likely a general-purpose heap allocator such as C’s malloc function while Temp and TempJob are likely not. Temp also seems to fall back to TempJob, or something like it, when it runs out of memory around the 16 KB mark.
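The 16 KB figure can be checked with a little arithmetic. Here's a sketch that assumes, purely for illustration, that Temp carves allocations out of a single 16 KB (16,384-byte) block and that each allocation consumes its 4 bytes plus the 16-byte gap observed in the test. Neither assumption is confirmed by Unity, and the class and method names are hypothetical.

```csharp
// Hypothetical estimate, assuming a fixed-size block and a fixed
// per-allocation cost. Unity's real Temp allocator may work differently.
static class TempBlockEstimate
{
    // Number of allocations that fit in a block if each one consumes
    // its own size plus a fixed gap.
    public static int CountThatFit(int blockBytes, int size, int gap)
    {
        int stride = size + gap; // 4 + 16 = 20 bytes in the test
        return blockBytes / stride;
    }
}
```

`TempBlockEstimate.CountThatFit(16 * 1024, 4, 16)` returns 819. Under these assumptions, 819 allocations fit before the block is exhausted, which produces 818 distances of 16 bytes and lines up with the discontinuity seen in the test.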

Finally, we’ve seen that the allocators are named after the intended lifecycle of their memory but do have different underlying behaviors beyond just lifecycle. Knowing these behaviors may come in handy when using native collections such as NativeArray<T>.

#1 by d33ds on November 4th, 2019

Do you think the Temp allocation will be much faster if it doesn’t have to go scan for another location after the 818th?